Simon Willison's Weblog

Feed

Structured Context Engineering for File-Native Agentic Systems

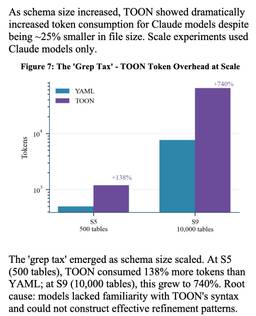

<p><strong><a href="https://arxiv.org/abs/2602.05447">Structured Context Engineering for File-Native Agentic Systems</a></strong></p>New paper by Damon McMillan exploring challenging LLM context tasks involving large SQL schemas (up to 10,000 tables) across different models and file formats:</p><blockquote><p>Using SQL generation as a proxy for programmatic agent operations, we present a systematic study of context engineering for structured data, comprising 9,649 experiments across 11 models, 4 formats (YAML, Markdown, JSON, Token-Oriented Object Notation [TOON]), and schemas ranging from 10 to 10,000 tables.</p></blockquote><p>Unsurprisingly, the biggest impact was the models themselves - with frontier models (Opus 4.5, GPT-5.2, Gemini 2.5 Pro) beating the leading open source models (DeepSeek V3.2, Kimi K2, Llama 4).</p><p>Those frontier models benefited from filesystem based context retrieval, but the open source models had much less convincing results with those, which reinforces

15 hours ago

AI Doesn’t Reduce Work—It Intensifies It

<p><strong><a href="https://hbr.org/2026/02/ai-doesnt-reduce-work-it-intensifies-it">AI Doesn’t Reduce Work—It Intensifies It</a></strong></p>Aruna Ranganathan and Xingqi Maggie Ye from Berkeley Haas School of Business report initial findings in the HBR from their April to December 2025 study of 200 employees at a "U.S.-based technology company".</p><p>This captures an effect I've been observing in my own work with LLMs: the productivity boost these things can provide is <em>exhausting</em>.</p><blockquote><p>AI introduced a new rhythm in which workers managed several active threads at once: manually writing code while AI generated an alternative version, running multiple agents in parallel, or reviving long-deferred tasks because AI could “handle them” in the background. They did this, in part, because they felt they had a “partner” that could help them move through their workload.</p><p>While this sense of having a “partner” enabled a feeling of momentum, the reality was a continual

1 day ago

Kākāpō mug by Karen James

<p>Friend and neighbour <a href="https://www.etsy.com/shop/KarenJamesMakes">Karen James</a> made me a Kākāpō mug. It has a charismatic Kākāpō, four Kākāpō chicks (in celebration of the <a href="https://simonwillison.net/2026/Jan/8/llm-predictions-for-2026/#1-year-k-k-p-parrots-will-have-an-outstanding-breeding-season">2026 breeding season</a>) and even has some <a href="https://www.theguardian.com/world/2026/jan/13/nz-kakapo-mating-season">rimu fruit</a>!</p><p><img src="https://static.simonwillison.net/static/2026/kakapo-mug-1.jpg" alt="A simply spectacular sgraffito ceramic mug with a bold, charismatic Kākāpō parrot taking up most of the visible space. It has a yellow beard and green feathers." style="max-width: 100%;" /></p><p><img src="https://static.simonwillison.net/static/2026/kakapo-mug-2.jpg" alt="Another side of the mug, two cute grey Kākāpō chicks are visible and three red rimu fruit that look like berries, one on the floor and two hanging from wiry branches." style="max-wi

2 days ago

Quoting Thomas Ptacek

<blockquote cite="https://twitter.com/tqbf/status/2019493645888462993"><p>People on the orange site are laughing at this, assuming it's just an ad and that there's nothing to it. Vulnerability researchers I talk to do not think this is a joke. As an erstwhile vuln researcher myself: do not bet against LLMs on this.</p><p><a href="https://www.axios.com/2026/02/05/anthropic-claude-opus-46-software-hunting">Axios: Anthropic's Claude Opus 4.6 uncovers 500 zero-day flaws in open-source</a></p><p>I think vulnerability research might be THE MOST LLM-amenable software engineering problem. Pattern-driven. Huge corpus of operational public patterns. Closed loops. Forward progress from stimulus/response tooling. Search problems.</p><p>Vulnerability research outcomes are in THE MODEL CARDS for frontier labs. Those companies have so much money they're literally distorting the economy. Money buys vuln research outcomes. Why would you think they were faking any of this?</p></blockquote><p class="cit

3 days ago

Vouch

<p><strong><a href="https://github.com/mitchellh/vouch">Vouch</a></strong></p>Mitchell Hashimoto's new system to help address the deluge of worthless AI-generated PRs faced by open source projects now that the friction involved in contributing has dropped so low.</p><p><a href="https://twitter.com/mitchellh/status/2020252149117313349">He says</a>:</p><blockquote><p>The idea is simple: Unvouched users can't contribute to your projects. Very bad users can be explicitly "denounced", effectively blocked. Users are vouched or denounced by contributors via GitHub issue or discussion comments or via the CLI.</p><p>Integration into GitHub is as simple as adopting the published GitHub actions. Done. Additionally, the system itself is generic to forges and not tied to GitHub in any way.</p><p>Who and how someone is vouched or denounced is up to the project. I'm not the value police for the world. Decide for yourself what works for your project and your community.</p></blockquote> <p>Tags: <a hr

3 days ago

Claude: Speed up responses with fast mode

<p><strong><a href="https://code.claude.com/docs/en/fast-mode">Claude: Speed up responses with fast mode</a></strong></p>New "research preview" from Anthropic today: you can now access a faster version of their frontier model Claude Opus 4.6 by typing <code>/fast</code> in Claude Code... but at a cost that's 6x the normal price.</p><p>Opus is usually $5/million input and $25/million output. The new fast mode is $30/million input and $150/million output!</p><p>There's a 50% discount until the end of February 16th, so only a 3x multiple (!) before then.</p><p>How much faster is it? The linked documentation doesn't say, but <a href="https://x.com/claudeai/status/2020207322124132504">on Twitter</a> Claude say:</p><blockquote><p>Our teams have been building with a 2.5x-faster version of Claude Opus 4.6.</p><p>We’re now making it available as an early experiment via Claude Code and our API.</p></blockquote><p>Claude Opus 4.5 had a context limit of 200,000 tokens. 4.6 has an option to increa
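
The pricing arithmetic quoted above can be checked with a few lines of Python. This is only a rough sketch: the per-million-token prices and the 50% discount come from the post, while the function names and the example token counts are made up for illustration.

```python
# Prices in USD per million tokens, as quoted in the post.
STANDARD = {"input": 5.00, "output": 25.00}
FAST = {"input": 30.00, "output": 150.00}

# The post mentions a 50% discount until the end of February 16th.
LAUNCH_DISCOUNT = 0.5


def price_multiple(fast, standard, discount=0.0):
    """Return how many times more expensive fast mode is, per token type."""
    return {kind: fast[kind] * (1 - discount) / standard[kind] for kind in standard}


def request_cost(prices, input_tokens, output_tokens, discount=0.0):
    """Cost in USD of one request at the given per-million-token prices."""
    total = prices["input"] * input_tokens + prices["output"] * output_tokens
    return total * (1 - discount) / 1_000_000


if __name__ == "__main__":
    # Hypothetical request size, chosen only to make the numbers easy to read.
    tokens_in, tokens_out = 1_000_000, 100_000
    print("multiple:", price_multiple(FAST, STANDARD))
    print("discounted multiple:", price_multiple(FAST, STANDARD, LAUNCH_DISCOUNT))
    print("standard cost:", request_cost(STANDARD, tokens_in, tokens_out))
    print("fast cost:", request_cost(FAST, tokens_in, tokens_out))
    print("fast cost with discount:", request_cost(FAST, tokens_in, tokens_out, LAUNCH_DISCOUNT))
```

Both input and output prices scale by the same factor, so the 6x (or discounted 3x) multiple applies to any mix of input and output tokens.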

3 days ago

Quoting David Crawshaw

<blockquote cite="https://crawshaw.io/blog/eight-more-months-of-agents"><p>I am having more fun programming than I ever have, because so many more of the programs I wish I could find the time to write actually exist. I wish I could share this joy with the people who are fearful about the changes agents are bringing. The fear itself I understand, I have fear more broadly about what the end-game is for intelligence on tap in our society. But in the limited domain of writing computer programs these tools have brought so much exploration and joy to my work.</p></blockquote><p class="cite">— <a href="https://crawshaw.io/blog/eight-more-months-of-agents">David Crawshaw</a>, Eight more months of agents</p> <p>Tags: <a href="https://simonwillison.net/tags/coding-agents">coding-agents</a>, <a href="https://simonwillison.net/tags/ai-assisted-programming">ai-assisted-programming</a>, <a href="https://simonwillison.net/tags/generative-ai">generative-ai</a>, <a href="https://simonwillison.ne

3 days ago

How StrongDM's AI team build serious software without even looking at the code

<p>Last week <a href="https://simonwillison.net/2026/Jan/28/the-five-levels/">I hinted at</a> a demo I had seen from a team implementing what Dan Shapiro called <a href="https://www.danshapiro.com/blog/2026/01/the-five-levels-from-spicy-autocomplete-to-the-software-factory/">the Dark Factory</a> level of AI adoption, where no human even looks at the code the coding agents are producing. That team was part of StrongDM, and they've just shared the first public description of how they are working in <a href="https://factory.strongdm.ai">Software Factories and the Agentic Moment</a>:</p><blockquote><p>We built a <strong>Software Factory</strong>: non-interactive development where specs + scenarios drive agents that write code, run harnesses, and converge without human review. [...]</p><p>In kōan or mantra form:</p><ul><li>Why am I doing this? (implied: the model should be doing this instead)</li></ul><p>In rule form:</p><ul><li>Code <strong>must not be</strong> written by humans</li><li>C

3 days ago

Quoting Tom Dale

<blockquote cite="https://twitter.com/tomdale/status/2019828626972131441"><p>I don't know why this week became the tipping point, but nearly every software engineer I've talked to is experiencing some degree of mental health crisis.</p><p>[...] Many people assuming I meant job loss anxiety but that's just one presentation. I'm seeing near-manic episodes triggered by watching software shift from scarce to abundant. Compulsive behaviors around agent usage. Dissociative awe at the temporal compression of change. It's not fear necessarily just the cognitive overload from living in an inflection point.</p></blockquote><p class="cite">— <a href="https://twitter.com/tomdale/status/2019828626972131441">Tom Dale</a></p> <p>Tags: <a href="https://simonwillison.net/tags/ai-ethics">ai-ethics</a>, <a href="https://simonwillison.net/tags/careers">careers</a>, <a href="https://simonwillison.net/tags/coding-agents">coding-agents</a>, <a href="https://simonwillison.net/tags/generative-ai">genera

4 days ago

Running Pydantic's Monty Rust sandboxed Python subset in WebAssembly

<p>There's a jargon-filled headline for you! Everyone's <a href="https://simonwillison.net/2026/Jan/8/llm-predictions-for-2026/#1-year-we-re-finally-going-to-solve-sandboxing">building sandboxes</a> for running untrusted code right now, and Pydantic's latest attempt, <a href="https://github.com/pydantic/monty">Monty</a>, provides a custom Python-like language (a subset of Python) in Rust and makes it available as both a Rust library and a Python package. I got it working in WebAssembly, providing a sandbox-in-a-sandbox.</p><p>Here's <a href="https://github.com/pydantic/monty">how they describe Monty</a>:</p><blockquote><p>Monty avoids the cost, latency, complexity and general faff of using full container based sandbox for running LLM generated code.</p><p>Instead, it let's you safely run Python code written by an LLM embedded in your agent, with startup times measured in single digit microseconds not hundreds of milliseconds.</p><p>What Monty <strong>can</strong> do:</p><ul><li>Run a

4 days ago

An Update on Heroku

<p><strong><a href="https://www.heroku.com/blog/an-update-on-heroku/">An Update on Heroku</a></strong></p>An ominous headline to see on the official Heroku blog and yes, it's bad news.</p><blockquote><p>Today, Heroku is transitioning to a sustaining engineering model focused on stability, security, reliability, and support. Heroku remains an actively supported, production-ready platform, with an emphasis on maintaining quality and operational excellence rather than introducing new features. We know changes like this can raise questions, and we want to be clear about what this means for customers.</p></blockquote><p>Based on context I'm guessing a "sustaining engineering model" (this definitely isn't a widely used industry term) means that they'll keep the lights on and that's it.</p><p>This is a very frustrating piece of corporate communication. "We want to be clear about what this means for customers" - then proceeds to <em>not be clear</em> about what this means for customers.</p><p

4 days ago

Quoting Karel D'Oosterlinck

<blockquote cite="https://twitter.com/kareldoostrlnck/status/2019477361557926281"><p>When I want to quickly implement a one-off experiment in a part of the codebase I am unfamiliar with, I get codex to do extensive due diligence. Codex explores relevant slack channels, reads related discussions, fetches experimental branches from those discussions, and cherry picks useful changes for my experiment. All of this gets summarized in an extensive set of notes, with links back to where each piece of information was found. Using these notes, codex wires the experiment and makes a bunch of hyperparameter decisions I couldn’t possibly make without much more effort.</p></blockquote><p class="cite">— <a href="https://twitter.com/kareldoostrlnck/status/2019477361557926281">Karel D'Oosterlinck</a>, I spent $10,000 to automate my research at OpenAI with Codex</p> <p>Tags: <a href="https://simonwillison.net/tags/codex-cli">codex-cli</a>, <a href="https://simonwillison.net/tags/coding-agen

5 days ago

Mitchell Hashimoto: My AI Adoption Journey

<p><strong><a href="https://mitchellh.com/writing/my-ai-adoption-journey">Mitchell Hashimoto: My AI Adoption Journey</a></strong></p>Some really good and unconventional tips in here for getting to a place with coding agents where they demonstrably improve your workflow and productivity. I particularly liked:</p><ul><li><p><a href="https://mitchellh.com/writing/my-ai-adoption-journey#step-2-reproduce-your-own-work">Reproduce your own work</a> - when learning to use coding agents Mitchell went through a period of doing the work manually, then recreating the same solution using agents as an exercise:</p><blockquote><p>I literally did the work twice. I'd do the work manually, and then I'd fight an agent to produce identical results in terms of quality and function (without it being able to see my manual solution, of course).</p></blockquote></li><li><p><a href="https://mitchellh.com/writing/my-ai-adoption-journey#step-3-end-of-day-agents">End-of-day agents</a> - letting agents step in whe

5 days ago

Opus 4.6 and Codex 5.3

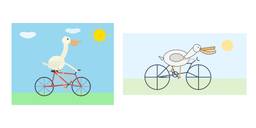

<p>Two major new model releases today, within about 15 minutes of each other.</p><p>Anthropic <a href="https://www.anthropic.com/news/claude-opus-4-6">released Opus 4.6</a>. Here's <a href="https://gist.github.com/simonw/a6806ce41b4c721e240a4548ecdbe216">its pelican</a>:</p><p><img alt="Slightly wonky bicycle frame but an excellent pelican, very clear beak and pouch, nice feathers." src="https://static.simonwillison.net/static/2026/opus-4.6-pelican.png" /></p><p>OpenAI <a href="https://openai.com/index/introducing-gpt-5-3-codex/">released GPT-5.3-Codex</a>, albeit only via their Codex app, not yet in their API. Here's <a href="https://gist.github.com/simonw/bfc4a83f588ac762c773679c0d1e034b">its pelican</a>:</p><p><img alt="Not nearly as good - the bicycle is a bit mangled, the pelican not nearly as well rendered - it's more of a line drawing." src="https://static.simonwillison.net/static/2026/codex-5.3-pelican.png" /></p><p>I've had a bit of preview access to both of these models and t

5 days ago

Spotlighting The World Factbook as We Bid a Fond Farewell

<p><strong><a href="https://www.cia.gov/stories/story/spotlighting-the-world-factbook-as-we-bid-a-fond-farewell/">Spotlighting The World Factbook as We Bid a Fond Farewell</a></strong></p>Somewhat devastating news today from CIA:</p><blockquote><p>One of CIA’s oldest and most recognizable intelligence publications, The World Factbook, has sunset.</p></blockquote><p>There's not even a hint as to <em>why</em> they decided to stop maintaining this publication, which has been their most useful public-facing initiative since 1971 and a cornerstone of the public internet since 1997.</p><p>In a bizarre act of cultural vandalism they've not just removed the entire site (including the archives of previous versions) but they've also set every single page to be a 302 redirect to their closure announcement.</p><p>The Factbook has been released into the public domain since the start. There's no reason not to continue to serve archived versions - a banner at the top of the page saying it's no longe

6 days ago

Voxtral transcribes at the speed of sound

<p><strong><a href="https://mistral.ai/news/voxtral-transcribe-2">Voxtral transcribes at the speed of sound</a></strong></p>Mistral just released Voxtral Transcribe 2 - a family of two new models, one open weights, for transcribing audio to text. This is the latest in their Whisper-like model family, and a sequel to the original Voxtral which they released <a href="https://simonwillison.net/2025/Jul/16/voxtral/">in July 2025</a>.</p><p>Voxtral Realtime - official name <code>Voxtral-Mini-4B-Realtime-2602</code> - is the open weights (Apache-2.0) model, available as a <a href="https://huggingface.co/mistralai/Voxtral-Mini-4B-Realtime-2602">8.87GB download from Hugging Face</a>.</p><p>You can try it out in this <a href="https://huggingface.co/spaces/mistralai/Voxtral-Mini-Realtime">live demo</a> - don't be put off by the "No microphone found" message, clicking "Record" should have your browser request permission and then start the demo working. I was very impressed by the demo - I talked

6 days ago

Distributing Go binaries like sqlite-scanner through PyPI using go-to-wheel

<p>I've been exploring Go for building small, fast and self-contained binary applications recently. I'm enjoying how there's generally one obvious way to do things and the resulting code is boring and readable - and something that LLMs are very competent at writing. The one catch is distribution, but it turns out publishing Go binaries to PyPI means any Go binary can be just a <code>uvx package-name</code> call away.</p><h4 id="sqlite-scanner">sqlite-scanner</h4><p><a href="https://github.com/simonw/sqlite-scanner">sqlite-scanner</a> is my new Go CLI tool for scanning a filesystem for SQLite database files.</p><p>It works by checking if the first 16 bytes of the file exactly match the SQLite magic number sequence <code>SQLite format 3\x00</code>. It can search one or more folders recursively, spinning up concurrent goroutines to accelerate the scan. It streams out results as it finds them in plain text, JSON or newline-delimited JSON. It can optionally display the file sizes as well.<
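
The magic-number check described above is easy to sketch. The following is a minimal Python illustration of the idea, not the actual Go implementation of sqlite-scanner: the 16-byte header string comes from the post, while the function names are hypothetical and the scan is single-threaded rather than concurrent.

```python
from pathlib import Path

# The 16-byte header that every SQLite 3 database file starts with.
SQLITE_MAGIC = b"SQLite format 3\x00"


def is_sqlite_file(path):
    """Return True if the first 16 bytes of the file match the SQLite magic."""
    try:
        with open(path, "rb") as f:
            return f.read(len(SQLITE_MAGIC)) == SQLITE_MAGIC
    except OSError:
        # Unreadable files (permissions, broken symlinks) are simply skipped.
        return False


def scan(root):
    """Recursively yield paths under root that look like SQLite databases."""
    for candidate in Path(root).rglob("*"):
        if candidate.is_file() and is_sqlite_file(candidate):
            yield candidate


if __name__ == "__main__":
    import sys

    # Usage: python scan_sqlite.py [folder]; defaults to the current folder.
    for found in scan(sys.argv[1] if len(sys.argv) > 1 else "."):
        print(found)
```

Reading only the first 16 bytes keeps the check cheap even on very large files, which is presumably part of why the approach suits a fast filesystem scanner.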

6 days ago

Introducing Deno Sandbox

<p><strong><a href="https://deno.com/blog/introducing-deno-sandbox">Introducing Deno Sandbox</a></strong></p>Here's a new hosted sandbox product from the Deno team. It's actually unrelated to Deno itself - this is part of their Deno Deploy SaaS platform. As such, you don't even need to use JavaScript to access it - you can create and execute code in a hosted sandbox using their <a href="https://pypi.org/project/deno-sandbox/">deno-sandbox</a> Python library like this:</p><div class="highlight highlight-source-shell"><pre><span class="pl-k">export</span> DENO_DEPLOY_TOKEN=<span class="pl-s"><span class="pl-pds">"</span>... API token ...<span class="pl-pds">"</span></span>uv run --with deno-sandbox python</pre></div><p>Then:</p><pre><span class="pl-k">from</span> <span class="pl-s1">deno_sandbox</span> <span class="pl-k">import</span> <span class="pl-v">DenoDeploy</span><span class="pl-s1">sdk</span> <span class="pl-c1">=</span> <span class="pl-en">DenoDeploy</span>()<span class="pl-k">

7 days ago

January sponsors-only newsletter is out

<p>I just sent the January edition of my <a href="https://github.com/sponsors/simonw/">sponsors-only monthly newsletter</a>. If you are a sponsor (or if you start a sponsorship now) you can <a href="https://github.com/simonw-private/monthly/blob/main/2026-01-january.md">access it here</a>. In the newsletter for January:</p><ul><li>LLM predictions for 2026</li><li>Coding agents get even more attention</li><li>Clawdbot/Moltbot/OpenClaw went very viral</li><li>Kakapo breeding season is off to a really strong start</li><li>New options for sandboxes</li><li>Web browsers are the "hello world" of coding agent swarms</li><li>Sam Altman addressed the Jevons paradox for software engineering</li><li>Model releases and miscellaneous extras</li></ul><p>Here's <a href="https://gist.github.com/simonw/13e595a236218afce002e9aeafd75cd0">a copy of the December newsletter</a> as a preview of what you'll get. Pay $10/month to stay a month ahead of the free copy!</p> <p>Tags: <a href="https://simonwillison

7 days ago

Quoting Brandon Sanderson

<blockquote cite="https://www.youtube.com/watch?v=mb3uK-_QkOo&t=832s"><p>This is the difference between Data and a large language model, at least the ones operating right now. Data created art because he wanted to grow. He wanted to become something. He wanted to understand. Art is the means by which we become what we want to be. [...]</p><p>The book, the painting, the film script is not the only art. It's important, but in a way it's a receipt. It's a diploma. The book you write, the painting you create, the music you compose is important and artistic, but it's also a mark of proof that you have done the work to learn, because in the end of it all, you are the art. The most important change made by an artistic endeavor is the change it makes in you. The most important emotions are the ones you feel when writing that story and holding the completed work. I don't care if the AI can create something that is better than what we can create, because it cannot be changed by that creatio

8 days ago

Introducing the Codex app

<p><strong><a href="https://openai.com/index/introducing-the-codex-app/">Introducing the Codex app</a></strong></p>OpenAI just released a new macOS app for their Codex coding agent. I've had a few days of preview access - it's a solid app that provides a nice UI over the capabilities of the Codex CLI agent and adds some interesting new features, most notably first-class support for <a href="https://developers.openai.com/codex/skills">Skills</a>, and <a href="https://developers.openai.com/codex/app/automations">Automations</a> for running scheduled tasks.</p><p><img alt="Screenshot of a macOS desktop application with a dark sidebar and light main content area. Left sidebar shows navigation items "New thread", "Automations", "Skills", and a "Threads" section containing two project folders: "local-codex-scratch" with tasks "Reply to greeting task 2h" and "List Codex.app contents 3h", and "shot-scraper" with t

8 days ago

A Social Network for A.I. Bots Only. No Humans Allowed.

<p><strong><a href="https://www.nytimes.com/2026/02/02/technology/moltbook-ai-social-media.html?unlocked_article_code=1.JFA.kBCd.hUw-s4vvfswK&smid=url-share">A Social Network for A.I. Bots Only. No Humans Allowed.</a></strong></p>I talked to Cade Metz for this New York Times piece on OpenClaw and Moltbook. Cade reached out after seeing my <a href="https://simonwillison.net/2026/Jan/30/moltbook/">blog post about that</a> from the other day.</p><p>In a first for me, they decided to send a photographer, Jason Henry, to my home to take some photos for the piece! That's my grubby laptop screen at the top of the story (showing <a href="https://www.moltbook.com/post/6e8c3a2c-5f9f-44bc-85ef-770a8d605598">this post</a> on Moltbook). There's a photo of me later in the story too, though sadly not one of the ones that Jason took that included our chickens.</p><p>Here's my snippet from the article:</p><blockquote><p>He was entertained by the way the bots coaxed each other into talking like mac

8 days ago

TIL: Running OpenClaw in Docker

<p><strong><a href="https://til.simonwillison.net/llms/openclaw-docker">TIL: Running OpenClaw in Docker</a></strong></p>I've been running <a href="https://openclaw.ai/">OpenClaw</a> using Docker on my Mac. Here is the first of my ongoing notes on how I set that up and the commands I'm using to administer it.</p><ul><li><a href="https://til.simonwillison.net/llms/openclaw-docker#use-their-docker-compose-configuration">Use their Docker Compose configuration</a></li><li><a href="https://til.simonwillison.net/llms/openclaw-docker#answering-all-of-those-questions">Answering all of those questions</a></li><li><a href="https://til.simonwillison.net/llms/openclaw-docker#running-administrative-commands">Running administrative commands</a></li><li><a href="https://til.simonwillison.net/llms/openclaw-docker#setting-up-a-telegram-bot">Setting up a Telegram bot</a></li><li><a href="https://til.simonwillison.net/llms/openclaw-docker#accessing-the-web-ui">Accessing the web UI</a></li><li><a href="h

9 days ago

Quoting Andrej Karpathy

<blockquote cite="https://twitter.com/karpathy/status/2017703360393318587"><p>Originally in 2019, GPT-2 was trained by OpenAI on 32 TPU v3 chips for 168 hours (7 days), with $8/hour/TPUv3 back then, for a total cost of approx. $43K. It achieves 0.256525 CORE score, which is an ensemble metric introduced in the DCLM paper over 22 evaluations like ARC/MMLU/etc.</p><p>As of the last few improvements merged into nanochat (many of them originating in modded-nanogpt repo), I can now reach a higher CORE score in 3.04 hours (~$73) on a single 8XH100 node. This is a 600X cost reduction over 7 years, i.e. the cost to train GPT-2 is falling approximately 2.5X every year.</p></blockquote><p class="cite">— <a href="https://twitter.com/karpathy/status/2017703360393318587">Andrej Karpathy</a></p> <p>Tags: <a href="https://simonwillison.net/tags/andrej-karpathy">andrej-karpathy</a>, <a href="https://simonwillison.net/tags/gpt-2">gpt-2</a>, <a href="https://simonwillison.net/tags/generative-ai">
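
The figures in that quote can be reproduced with some quick arithmetic. This sketch uses only the numbers stated in the quote (32 chips, 168 hours, $8 per chip-hour, ~$73 today, 7 years); the variable names are illustrative, and the results are approximate in the same way the quoted "600X" and "2.5X" are.

```python
# Inputs taken directly from the quote.
TPU_CHIPS = 32
TRAINING_HOURS = 168
USD_PER_CHIP_HOUR = 8
COST_TODAY_USD = 73
YEARS = 7

# 2019 training cost: chips x hours x hourly price per chip.
cost_2019_usd = TPU_CHIPS * TRAINING_HOURS * USD_PER_CHIP_HOUR

# Overall cost reduction factor between 2019 and now.
reduction = cost_2019_usd / COST_TODAY_USD

# Equivalent constant yearly factor: the 7th root of the overall reduction.
annual_factor = reduction ** (1 / YEARS)

if __name__ == "__main__":
    print(f"2019 cost: ${cost_2019_usd:,}")
    print(f"reduction: {reduction:.0f}x")
    print(f"annual factor: {annual_factor:.2f}x per year")
```

The computed reduction lands a little under 600x and the yearly factor a little under 2.5x, consistent with the rounded figures in the quote.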

10 days ago

Singing the gospel of collective efficacy

<p><strong><a href="https://interconnected.org/home/2026/01/30/efficacy">Singing the gospel of collective efficacy</a></strong></p>Lovely piece from Matt Webb about how you can "just do things" to help make your community better for everyone:</p><blockquote><p>Similarly we all love when the swifts visit (beautiful birds), so somebody started a group to get swift nest boxes made and installed collectively, then applied for subsidy funding, then got everyone to chip in such that people who couldn’t afford it could have their boxes paid for, and now suddenly we’re all writing to MPs and following the legislation to include swift nesting sites in new build houses. Etc.</p><p>It’s called <em>collective efficacy</em>, the belief that you can make a difference by acting together.</p></blockquote><p>My current favorite "you can just do things" is a bit of a stretch, but apparently you can just build a successful software company for 20 years and then use the proceeds to <a href="https://bmore

11 days ago

Quoting Steve Yegge

<blockquote cite="https://steve-yegge.medium.com/software-survival-3-0-97a2a6255f7b"><p>Getting agents using Beads requires much less prompting, because Beads now has 4 months of “Desire Paths” design, which I’ve talked about before. Beads has evolved a very complex command-line interface, with 100+ subcommands, each with many sub-subcommands, aliases, alternate syntaxes, and other affordances.</p><p>The complicated Beads CLI isn’t for humans; it’s for agents. What I did was make their hallucinations real, over and over, by implementing whatever I saw the agents trying to do with Beads, until nearly every guess by an agent is now correct.</p></blockquote><p class="cite">— <a href="https://steve-yegge.medium.com/software-survival-3-0-97a2a6255f7b">Steve Yegge</a>, Software Survival 3.0</p> <p>Tags: <a href="https://simonwillison.net/tags/steve-yegge">steve-yegge</a>, <a href="https://simonwillison.net/tags/coding-agents">coding-agents</a>, <a href="https://simonwillison.net/tags/

11 days ago

Moltbook is the most interesting place on the internet right now

<p>The hottest project in AI right now is Clawdbot, <a href="https://x.com/openclaw/status/2016058924403753024">renamed to Moltbot</a>, <a href="https://openclaw.ai/blog/introducing-openclaw">renamed to OpenClaw</a>. It's an open source implementation of the digital personal assistant pattern, built by Peter Steinberger to integrate with the messaging system of your choice. It's two months old, has over 114,000 stars <a href="https://github.com/openclaw/openclaw">on GitHub</a> and is seeing incredible adoption, especially given the friction involved in setting it up.</p><p>(Given the <a href="https://x.com/rahulsood/status/2015397582105969106">inherent risk of prompt injection</a> against this class of software it's my current pick for <a href="https://simonwillison.net/2026/Jan/8/llm-predictions-for-2026/#1-year-a-challenger-disaster-for-coding-agent-security">most likely to result in a Challenger disaster</a>, but I'm going to put that aside for the moment.)</p><p>OpenClaw is built

11 days ago

We gotta talk about AI as a programming tool for the arts

<p><strong><a href="https://www.tiktok.com/@chris_ashworth/video/7600801037292768525">We gotta talk about AI as a programming tool for the arts</a></strong></p>Chris Ashworth is the creator and CEO of <a href="https://en.wikipedia.org/wiki/QLab">QLab</a>, a macOS software package for “cue-based, multimedia playback” which is designed to automate lighting and audio for live theater productions.</p><p>I recently started following him on TikTok where he posts about his business and theater automation in general - Chris founded <a href="https://voxel.org/faq/">the Voxel</a> theater in Baltimore which QLab use as a combined performance venue, teaching hub and research lab (here's <a href="https://bmoreart.com/2024/09/the-voxel-is-a-cutting-edge-theater-experiment.html">a profile of the theater</a>), and the resulting videos offer a fascinating glimpse into a world I know virtually nothing about.</p><p><a href="https://www.tiktok.com/@chris_ashworth/video/7600801037292768525">This latest Ti

11 days ago

Datasette 1.0a24

<p><strong><a href="https://docs.datasette.io/en/latest/changelog.html#a24-2026-01-29">Datasette 1.0a24</a></strong></p>New Datasette alpha this morning. Key new features:</p><ul><li>Datasette's <code>Request</code> object can now handle <code>multipart/form-data</code> file uploads via the new <a href="https://docs.datasette.io/en/latest/internals.html#internals-formdata">await request.form(files=True)</a> method. I plan to use this for a <code>datasette-files</code> plugin to support attaching files to rows of data.</li><li>The <a href="https://docs.datasette.io/en/latest/contributing.html#setting-up-a-development-environment">recommended development environment</a> for hacking on Datasette itself now uses <a href="https://github.com/astral-sh/uv">uv</a>. Crucially, you can clone Datasette and run <code>uv run pytest</code> to run the tests without needing to manually create a virtual environment or install dependencies first, thanks to the <a href="https://til.simonwillison.net/uv/

12 days ago

Adding dynamic features to an aggressively cached website

<p>My blog uses aggressive caching: it sits behind Cloudflare with a 15 minute cache header, which guarantees it can survive even the largest traffic spike to any given page. I've recently added a couple of dynamic features that work in spite of that full-page caching. Here's how those work.</p><h4 id="edit-links-that-are-visible-only-to-me">Edit links that are visible only to me</h4><p>This is a Django site and I manage it through the Django admin.</p><p>I have <a href="https://github.com/simonw/simonwillisonblog/blob/b8066f870a94d149f5e8cee6e787d3377c0b9507/blog/models.py#L254-L449">four types of content</a> - entries, link posts (aka blogmarks), quotations and notes. Each of those has a different model and hence a different Django admin area.</p><p>I wanted an "edit" link on the public pages that was only visible to me.</p><p>The button looks like this:</p><p><img src="https://static.simonwillison.net/static/2026/edit-link.jpg" alt="Entry footer - it says Posted 27th January 2026 a

13 days ago